Colorado's Pioneering AI Legislation

This spring, Colorado became the first state to enact a comprehensive AI law targeting high-risk AI systems: those that make decisions with a significant impact on individuals, such as screening job applications or approving loans. The law sets a precedent for other states to follow.

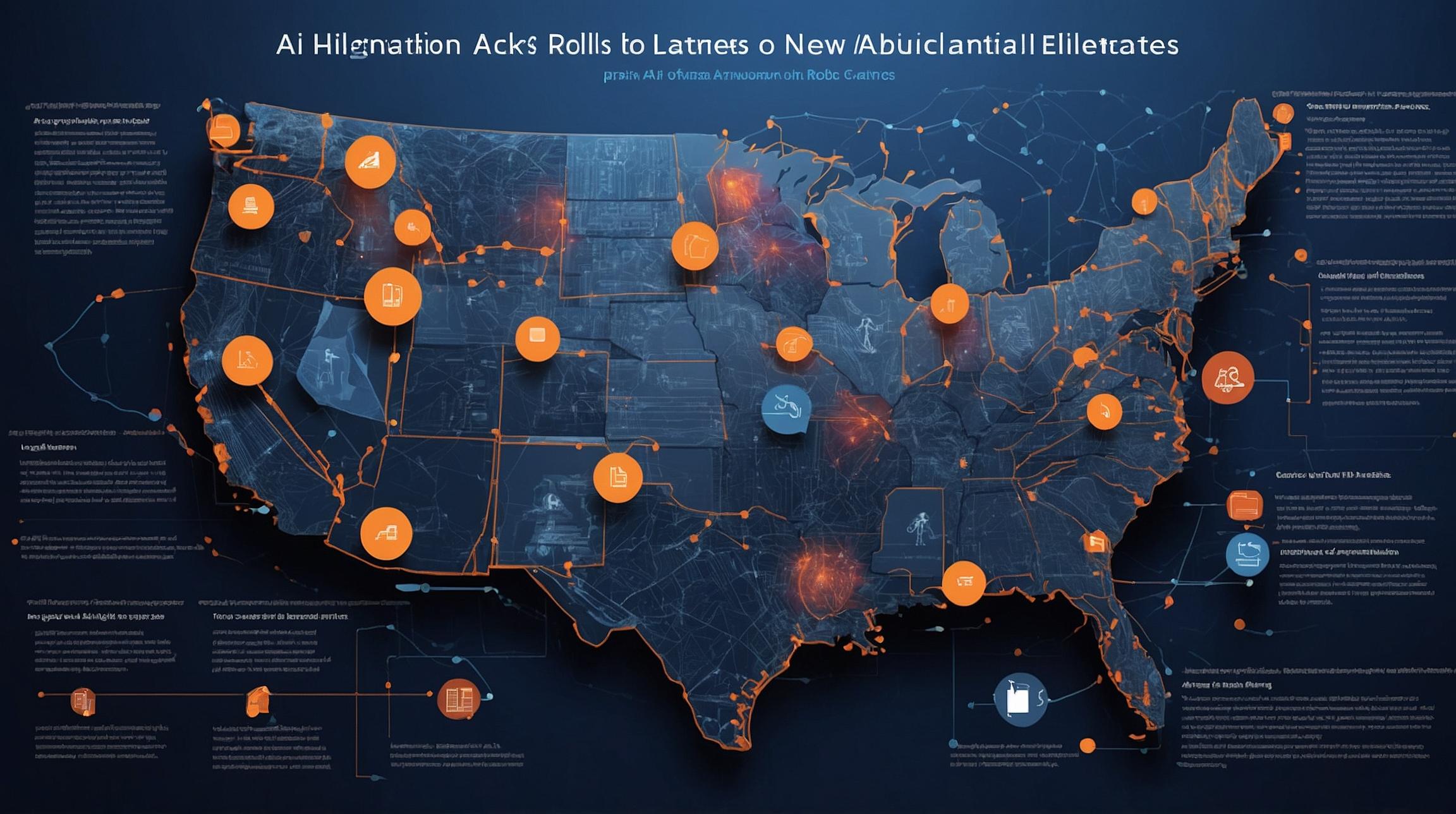

Diverse Approaches to AI Regulation Across States

While some states like Colorado opt for broad legislation, others adopt more targeted regulations focused on specific AI applications or industries. More than 40 states have introduced AI-related bills this year, as noted by the National Conference of State Legislatures.

Industry-Specific AI Regulations

Healthcare

California introduced a bill requiring doctors to oversee AI tools in medical decision-making, ensuring AI aids rather than replaces human judgment.

Insurance

States like Rhode Island, Louisiana, and New York are addressing potential biases in AI-driven insurance decisions, aiming to prevent discrimination.

Technology

California's SB 1047 would mandate safety measures for AI developers, including shutdown protocols and cybersecurity tests before model training. Given California's influence, the bill could shape AI norms nationwide.

Regulation of Specific AI Applications

Content Creation

The Tennessee ELVIS Act protects artists from AI-generated voice clones, safeguarding personal rights related to voice and likeness.

Consumer Interactions

The Utah AI Policy Act requires businesses to be transparent about their use of AI tools in consumer interactions, fostering consumer trust.

Recruitment

New Jersey proposed a bill for transparency in AI-powered hiring, allowing applicants to opt out of AI evaluations during video interviews.

Integration with Consumer Privacy Laws

States like Virginia and Oregon have integrated AI regulations into their consumer privacy laws. These laws allow consumers to opt out of automated profiling of their data when it affects access to services like loans and housing.

The Comprehensive Approach of Colorado

The Colorado AI Act, which takes effect in 2026, aims to protect against bias in high-risk AI systems, an approach similar to the EU AI Act's. It requires developers and deployers to be transparent about AI's impact on consumer decisions, and it could guide other states toward comprehensive AI regulation.