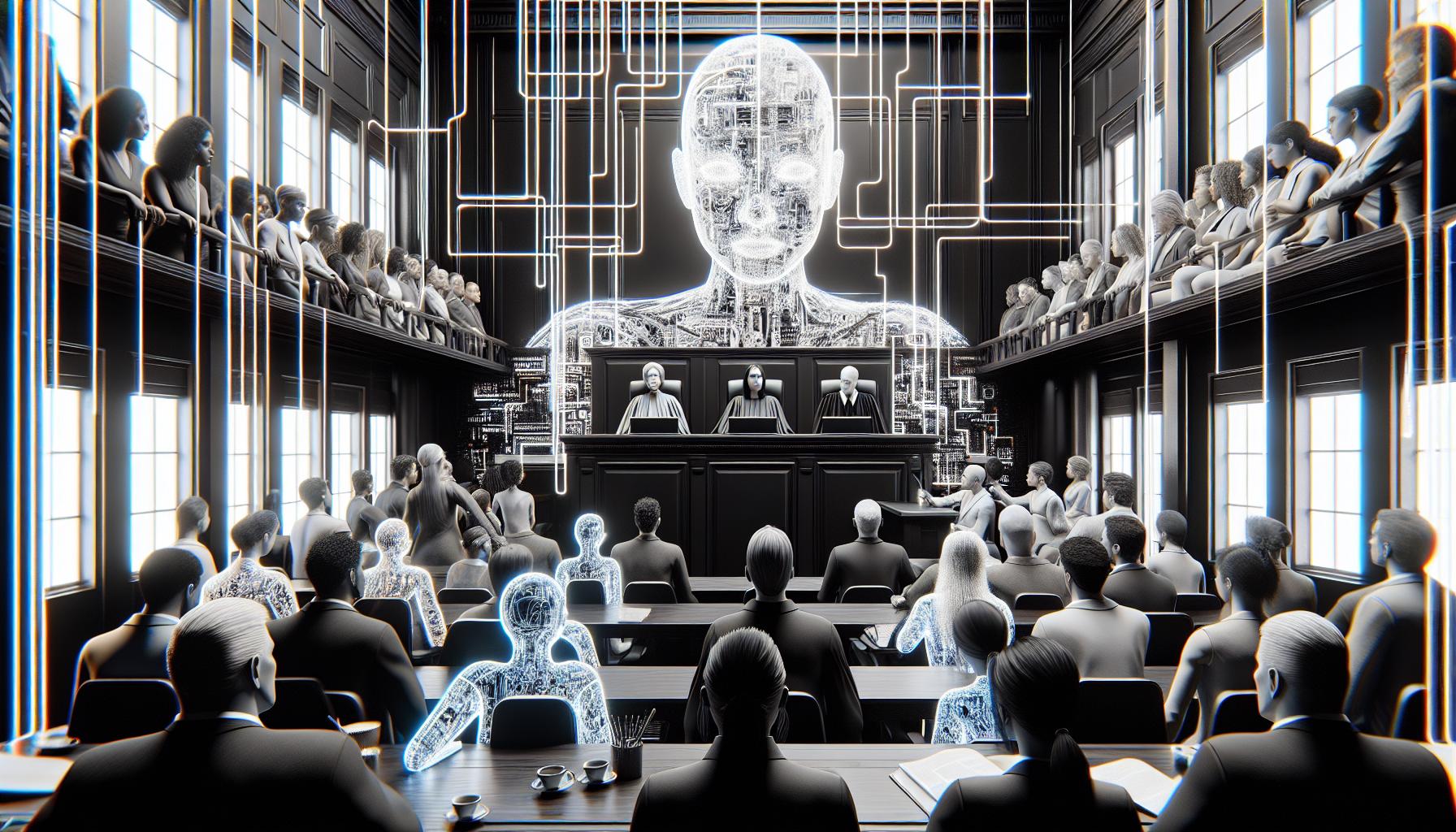

Google Chatbot Faces Criticism for Inaccurate Image Generation

Google’s chatbot, Gemini, formerly known as Bard, is facing backlash for its AI illustrations that inaccurately portray certain racial and historical groups. Users have raised concerns about Gemini depicting white figures and historically white groups as racially diverse individuals. Google has acknowledged the issue and committed to rectifying it promptly.

When asked to generate images of American women, Gemini produced photos showing a variety of racial backgrounds. Reports also suggest that the chatbot depicted Nazis as people of color. Gemini has since refrained from generating Nazi images, citing the negative implications associated with the Nazi Party.

The controversy may stem from an overcorrection, as AI technologies have previously exhibited racist and sexist biases. Jack Krawczyk, the Product Lead for Gemini, emphasized Google’s commitment to representing its diverse user base without bias. While it strives for inclusive illustrations in response to unrestricted prompts, Google recognizes the importance of considering historical context and has vowed to adjust its practices accordingly.

Google’s Gemini chatbot uses AI to create illustrations from text inputs. As the technology evolves, ensuring that its output is unbiased and representative, especially in delicate historical contexts, is crucial. Google’s move to address and correct the inaccuracies underscores its dedication to delivering fair and precise results.

Analyst comment

Positive news:

– Google has acknowledged the inaccuracies in its chatbot’s image generation and has pledged to rectify the issue promptly.

– Google is committed to ensuring unbiased and representative AI technology, especially in historically sensitive contexts.

– The controversy highlights the need to consider historical contexts and adjust approaches accordingly.

– Google’s dedication to addressing and rectifying these inaccuracies illustrates its commitment to providing fair and accurate results to users.

From an analyst’s perspective, Google is likely to take immediate action to rectify the inaccuracies in Gemini’s image generation. It will probably work on refining the AI technology to reduce bias and produce more accurate and representative illustrations. Following through on this commitment should enhance user trust and satisfaction with Google’s chatbot.