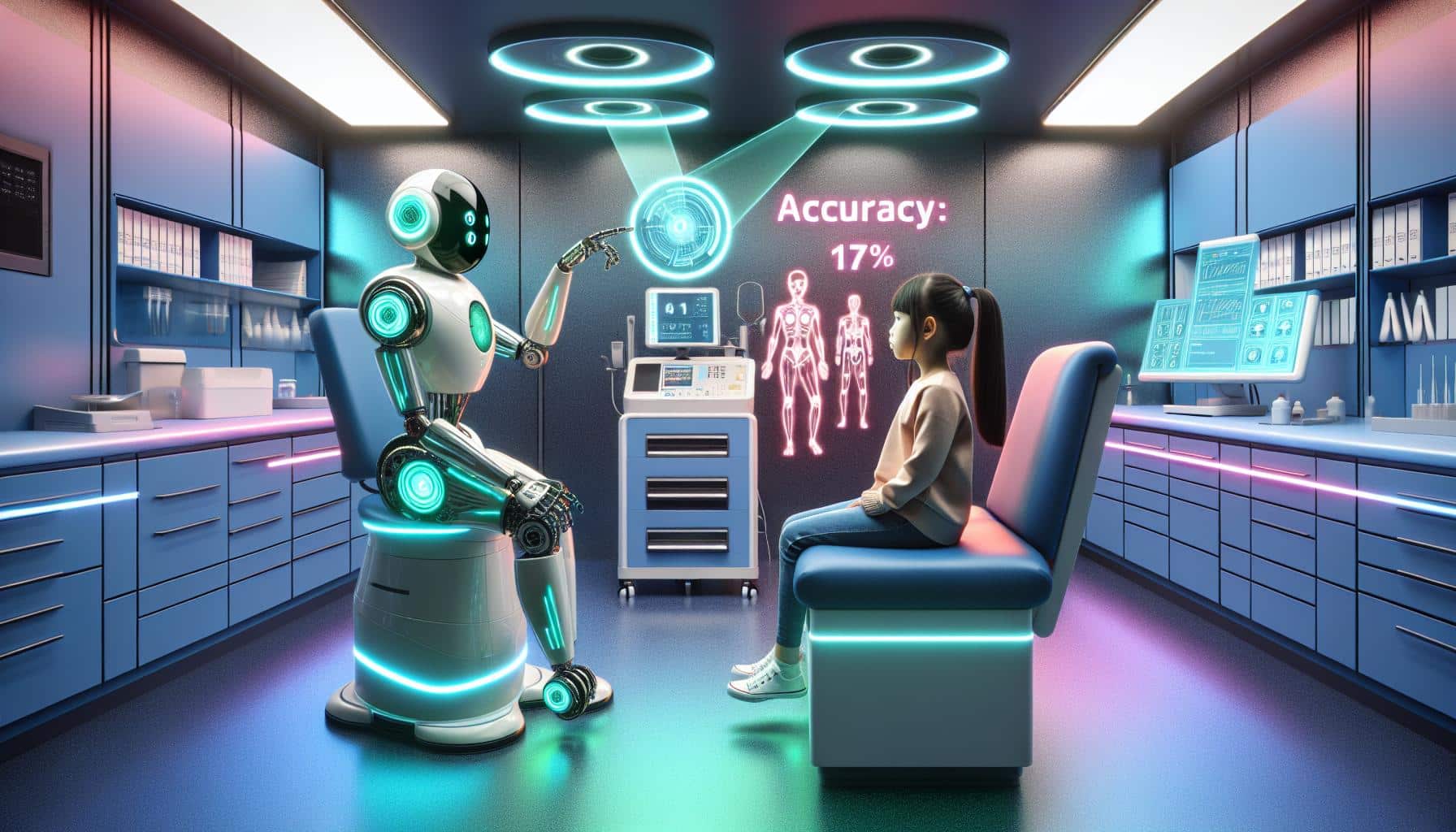

Study Finds ChatGPT AI Chatbot Highly Inaccurate at Pediatric Diagnoses

A study published in the journal JAMA Pediatrics has found that ChatGPT, the artificial intelligence (AI) chatbot developed by OpenAI and powered by the GPT-3.5 language model, is highly inaccurate at making pediatric diagnoses. The findings illustrate the limitations of AI in healthcare and underline the importance of clinical experience and expertise.

ChatGPT AI Chatbot Fails to Correctly Diagnose 83% of Pediatric Cases

In the study, researchers evaluated ChatGPT’s ability to diagnose pediatric cases by running 100 patient case challenges sourced from JAMA Pediatrics and The New England Journal of Medicine through the chatbot. For each case, they asked ChatGPT to provide a “differential diagnosis and a final diagnosis.” The chatbot failed to correctly diagnose 83% of the cases.

Researchers Assess ChatGPT’s Ability to Diagnose Pediatric Cases

This study marks the first attempt to assess ChatGPT’s diagnostic capabilities for pediatric cases. A previous study of the newer language model GPT-4 had already shown low diagnostic accuracy on challenging medical cases involving both adults and children. To assess accuracy, two medical researchers compared the AI-generated diagnosis for each case with the diagnosis made by clinicians.

High Levels of Inaccuracy in ChatGPT’s Pediatric Diagnoses, Study Shows

Of the 100 cases evaluated, ChatGPT gave an outright incorrect diagnosis in 72. A further 11 were categorized as “clinically related but too broad to be considered a correct diagnosis.” Together, these make up the 83 cases counted as misdiagnosed, leaving 17 diagnosed correctly. The study highlights specific cases where ChatGPT’s diagnoses differed sharply from those of physicians. In one case, involving a teenager with autism who presented with a rash and joint stiffness, the chatbot diagnosed immune thrombocytopenic purpura, a misdiagnosis that could have serious consequences.

Mixed Outlook for Healthcare as AI Chatbot’s Diagnostic Performance Raises Concerns

Despite the high levels of inaccuracy in AI-generated pediatric diagnoses, the researchers acknowledge that large language models (LLMs) like ChatGPT still hold value for physicians as tools for administrative tasks such as note-taking. The findings nonetheless underscore the importance of clinical experience and expertise in accurate diagnosis. One major limitation the study identified is ChatGPT’s inability to form relationships between medical disorders, which hinders its diagnostic accuracy. The chatbot also lacks real-time access to medical information, so its knowledge may be outdated. The researchers suggest that more selective training and access to up-to-date medical information could improve the diagnostic accuracy of AI chatbots in the future.

Analyst comment

Negative news: The study finds that ChatGPT is highly inaccurate at pediatric diagnoses, failing to correctly diagnose 83% of pediatric cases. The findings highlight the limitations of AI in healthcare and emphasize the importance of clinical expertise. Market impact: AI chatbots may still hold value as administrative tools, but their diagnostic capabilities are questionable. Clinical experience and access to up-to-date medical information remain crucial, which suggests potential challenges for AI chatbots in the healthcare market.