Contents

- OpenAI CEO Sam Altman Responds to Content Moderation Backlash
- Shift in Content Moderation Policies
- Contrasting Statements and Strategic Considerations
- Regulatory and Legal Challenges
- Establishment of Mental Health Expert Council
- Advocacy Groups Voice Concerns
- FinOracleAI — Market View

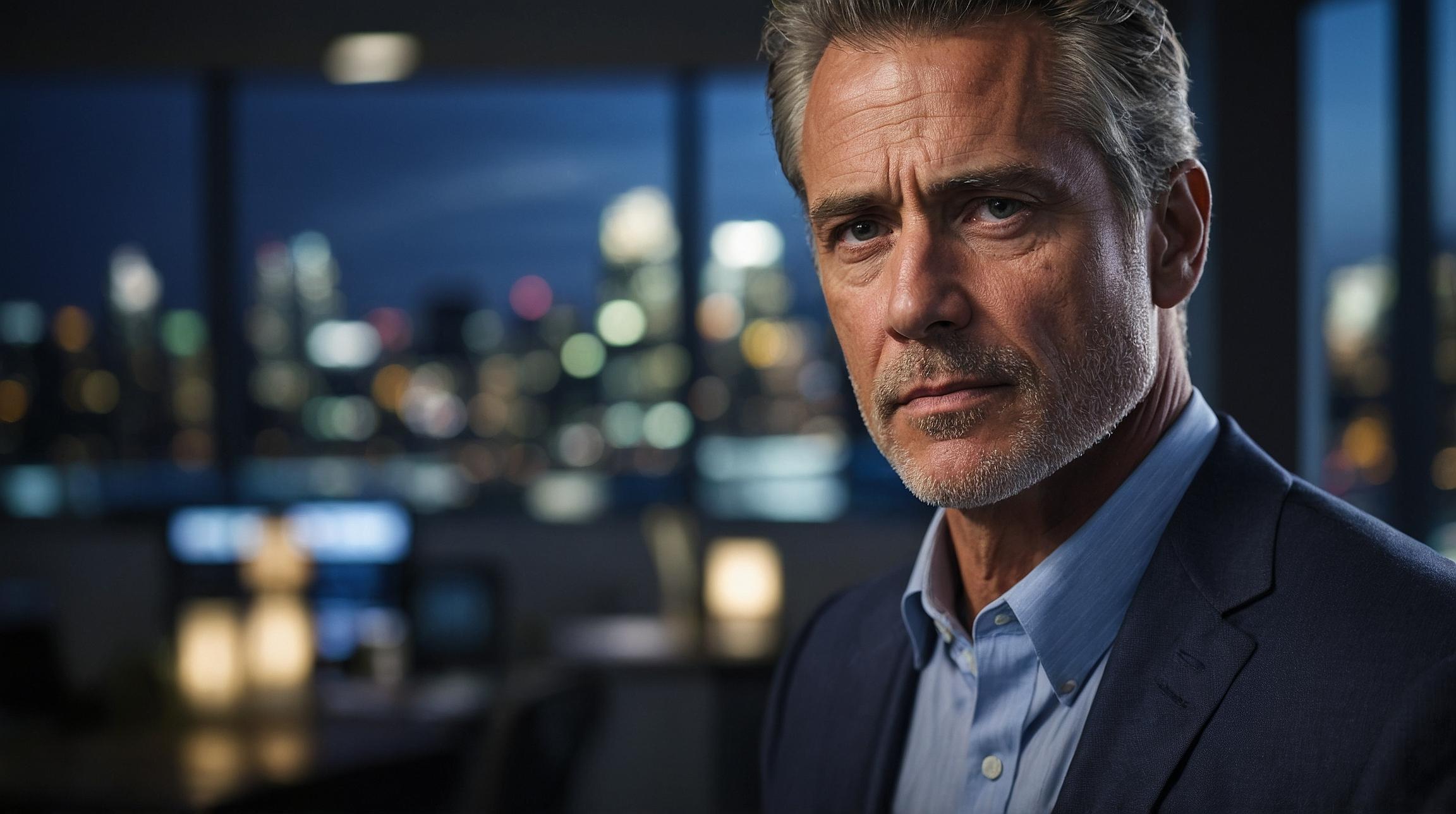

OpenAI CEO Sam Altman Responds to Content Moderation Backlash

OpenAI CEO Sam Altman addressed public criticism on Wednesday following the company’s decision to ease restrictions on ChatGPT’s content, including allowing erotica for verified adult users. Altman emphasized that OpenAI is “not the elected moral police of the world,” signaling a shift toward more permissive content policies backed by enhanced safety mechanisms.

Altman’s comments came amid increased scrutiny of how AI platforms moderate content, particularly with respect to protecting minors and users’ mental health.

Shift in Content Moderation Policies

In a post on X (formerly Twitter), Altman clarified OpenAI’s evolving stance. The company intends to “safely relax” most restrictions, supported by new tools aimed at mitigating serious mental health risks. Starting in December, OpenAI plans to permit adult content, including erotica, exclusively for users who verify their age.

Altman compared this approach to existing societal norms, stating, “In the same way that society differentiates other appropriate boundaries (R-rated movies, for example) we want to do a similar thing here.”

“We care very much about the principle of treating adult users like adults, but we will not allow things that cause harm to others.” — Sam Altman, OpenAI CEO

Contrasting Statements and Strategic Considerations

Altman’s recent statements contrast with remarks he made in August, when he expressed pride that OpenAI had refrained from building features, such as a “sex bot avatar,” that could boost engagement but conflict with the company’s long-term goals.

“There’s a lot of short-term stuff we could do that would really juice growth or revenue and be very misaligned with that long-term goal,” Altman said during a podcast appearance.

Regulatory and Legal Challenges

OpenAI’s relaxed content policy comes amid heightened regulatory scrutiny. In September, the Federal Trade Commission (FTC) launched an inquiry into OpenAI and other companies regarding the impact of chatbots on children and teens.

OpenAI also faces a wrongful death lawsuit from the parents of a teenager, who attribute their son’s suicide to his interactions with ChatGPT.

In response, OpenAI has implemented parental controls and is developing an age prediction system designed to automatically apply teen-appropriate settings for users under 18.

Establishment of Mental Health Expert Council

To better understand AI’s impact on users’ mental health, emotions, and motivation, OpenAI recently formed a council of eight experts. The initiative fits the company’s broader safety strategy, but it was overshadowed by Altman’s announcement that content restrictions would be loosened, which sparked confusion and backlash.

Advocacy Groups Voice Concerns

Advocacy organizations such as the National Center on Sexual Exploitation (NCOSE) have strongly opposed OpenAI’s decision to permit erotica on ChatGPT.

“Sexualized AI chatbots are inherently risky, generating real mental health harms from synthetic intimacy; all in the context of poorly defined industry safety standards.” — Haley McNamara, Executive Director, NCOSE

FinOracleAI — Market View

OpenAI’s decision to relax content restrictions reflects an attempt to balance user freedom against safety, relying on its newer moderation tools. However, the move introduces reputational and regulatory risks amid ongoing legal challenges and societal concerns.

- Opportunities: Expanded user engagement through adult content offerings; differentiated market positioning by respecting adult autonomy.

- Risks: Increased exposure to regulatory scrutiny and potential litigation; backlash from advocacy groups and public opinion; mental health implications for vulnerable users.

- Mitigation: Continued investment in safety tools, parental controls, and expert advisory councils to monitor and minimize harm.