AI and its Misalignment with Human Ideology

Advances in technology bring challenges and opportunities that were unimaginable just a few years ago. The next decade promises even smaller devices, larger networks, and computers that can extend human thought. However, the public’s obsession with artificial intelligence (AI) is diverting attention away from more pressing threats to society. The real danger is not a machine-driven apocalypse but the flawed human decisions that AI accelerates and amplifies.

The Importance of Separating Facts from Delusion

The spread of deliberately false ideas in our discourse is a significant threat to our society and to global progress. Without a shared understanding of objective scientific insights and a true historical record, it becomes difficult to collaborate for the greater good. Unfortunately, there is currently no filtration system that separates shibboleths from facts in the information fed to AI systems.

The Hazards of Training AI Based on Discriminatory Precedent

The real hazard lies in the flawed human decisions that AI perpetuates. Many widely accepted beliefs and calculations, such as financial risk assessments and predictions of criminal recidivism, are based on erroneous assumptions. Training AI systems on such discriminatory precedent leads to biased and unfair outcomes. Correcting flawed ideology, whether it resides in human memory or in computer storage, is a complex task that takes time and careful ethical consideration.

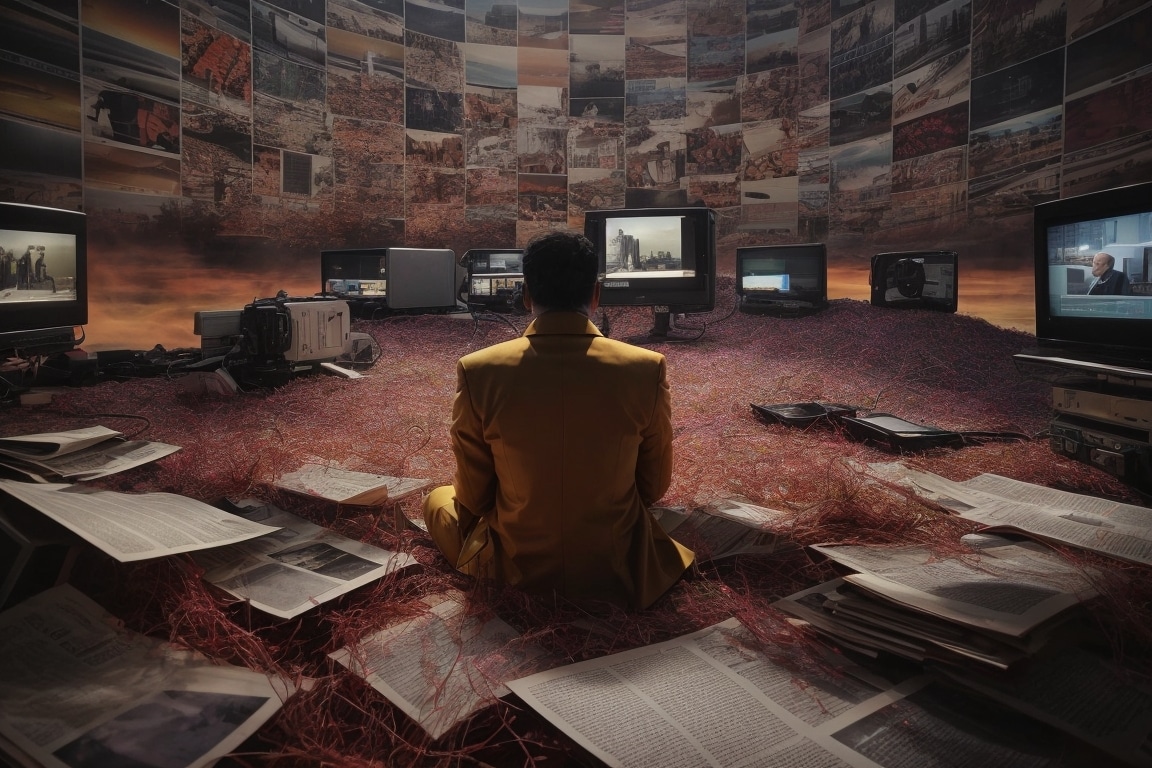

Misinformation as a Source of Contamination

Foreign interests have weaponized misinformation and injected it into our popular press and social media platforms. This harmful misinformation becomes ingrained in the training sets that teach AI systems how to communicate. AI itself lacks ambition and judgment, but it excels at pattern recognition, so it reproduces whatever patterns its training data contains. If we fail to actively address this issue and align training sets with our expectations and laws, it will take a long time to remove toxic content from AI systems.

AI Misalignment: China’s Advancements vs. The United States

China has become a dominant player in the global market, particularly in technology. Policymakers once expected China to be a large market and a friendly competitor, but in reality it has become a fierce commercial rival and a significant military antagonist to the United States. China produces far more engineering students and Ph.D.s than the U.S., giving it a substantial advantage in the field of AI.

The True Danger: The Integrity of AI Training

The real threat associated with AI is not the technology itself but the integrity of its training. Like a human brain, AI “learns” what it is taught. Today’s computer models are therefore susceptible to incorporating incorrect ideas and disproven theories into their conclusions. This leads to the spread of spiritual superstition, entrenched suspicion, and fabricated conflicts within society.

Focusing on Scientifically Proven and Socially Aligned Teachings

To address the misalignment between AI and human ideology, it is crucial to focus on providing AI systems with scientifically proven, socially aligned, and integrity-tested information. This requires a deliberate effort to ensure that the teachings given to AI machines are accurate, fair, and aligned with our values. It is through human ingenuity and attention to detail that we can overcome the challenges posed by AI.

Disclaimer: This article is based on the opinions of Peter L. Levin and does not represent the views of OpenAI or its partners.

Analyst comment

Neutral news. The article highlights the potential dangers of misalignment between AI and human ideology. It emphasizes the need to address flawed human decisions and discriminatory precedent in AI training. It also mentions the spread of misinformation and China’s advancements in AI. Analyst’s prediction: The market will see an increased focus on ethical considerations and accuracy in AI training, with efforts to align AI systems with human values.